How to Connect and Control the Micromelon Rover with OpenMV

The Micromelon Rover comes with an expansion header that allows for the connection of various additional devices. In this guide, we will show you how to connect an OpenMV and use it to control the Rover via the UART. Our goal is to have the Rover detect objects using the OpenMV and drive towards them.

If you're not familiar with UART, please refer to the “How to use UART” guide:

Hardware Setup

For the hardware setup, you will need to connect the Rover’s RX and TX pins to the OpenMV. You will also need to connect the 3.3V and GND pins to power the device. You can find the Rover’s header pinout and an example wiring diagram below:

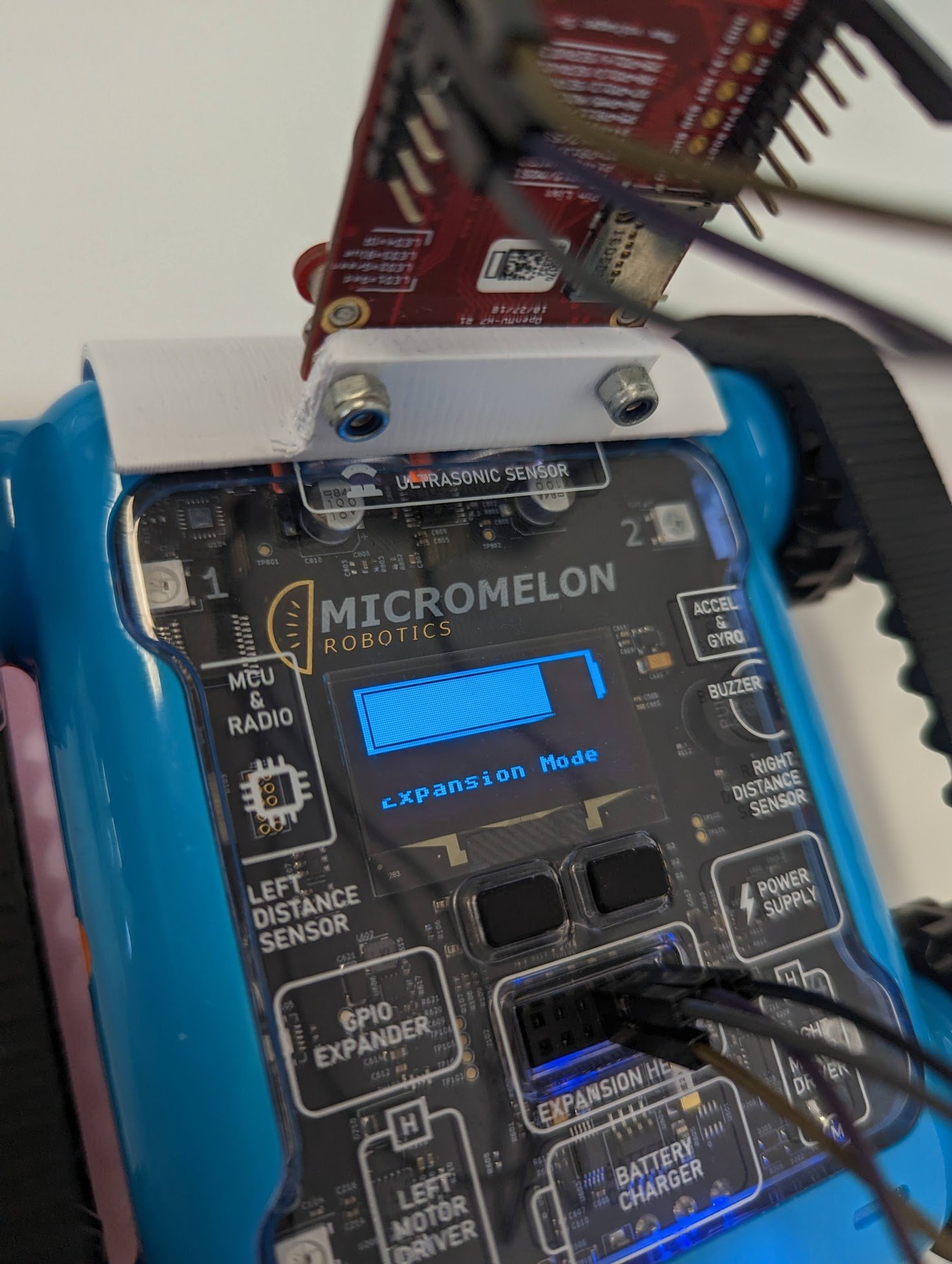

To attach the OpenMV to the rover, head over to Printables or Thingiverse, and download the OpenMV clip for the rover. To assemble, simply use 2 M3 (8 - 10mm), and screw the OpenMV onto the clip. Then, click the clip onto the front of the rover.

Rover UART Initialisation

When programming the OpenMV, we recommend using the OpenMV IDE. It has built-in camera calibration functionality and makes it easy to flash the OpenMV. Since the OpenMV is programmed using MicroPython, you can store all the code for this project in a Python file inside the IDE. The MicroPython documentation provides a good overview of many of the libraries that will be used.

Whenever you're working with OpenMV Python files, the code has three key elements. The first step is to import any necessary libraries, constants, and global variables. By default, the sensor, image, and time libraries will be imported. You will also need to import the pyb library, which gives you the ability to use specific device functionality, such as sending UART packets.import sensor, image, time from pyb import UART roverSerial = UART(3, 115200, timeout_char=1000)

The next step is to set up the Rover to receive UART packets by setting it to "Expansion Mode." To do this, you will need to send a UART data packet in the following format:

| Start Byte | Operation Byte (Read/Write) | Register Byte | Data Length Byte | Data |

|---|---|---|---|---|

| 0x55 | 0x01 (Writing) | 26 | 1 | 1 (Bluetooth Mode) |

To make it easier to send future Rover commands, you should create packet building and sending functions. This way, you can call the appropriate command in the main loop.

def build_and_send_packet(operation, command, data=None): if data is None: data = [] packet = [0x55, operation, command, len(data)] packet.extend(data) return packet

This can then be used to arm the Rover:

def arm_rover(state): arm_packet = build_and_send_packet(1, 26, [state]) roverSerial.write(arm_packet) time.sleep(0.25)

Uploading this code to the Arduino should cause the Rover to enter the correct mode. You will know it worked if the Rover's LCD reads "Expansion Mode" where the bot ID used to be.

OpenMV Setup

Now that the Rover is ready to receive commands over UART, let's set up the OpenMV. When mounting the OpenMV for object tracking, a slight downward angle is useful. You can use the Micromelon 3D printed mount for this purpose.

If the OpenMV is positioned upside down, then the image should be flipped accordingly:

sensor.set_vflip(True) sensor.set_hmirror(True)

You will also need to define the clock to ensure that each snapshot taken can be cycled through.

clock = time.clock() # define the clock while(True): click.tick() img = sensor.snapshot()

Running the current code should enable the Rover’s UART expansion header and show the camera’s output inside the OpenMV IDE.

import sensor, image, time from pyb import UART roverSerial = UART(3, 115200, timeout_char=1000) def build_and_send_packet(operation, command, data=None): if data is None: data = [] packet = [0x55, operation, command, len(data)] packet.extend(data) return packet def arm_rover(state): arm_packet = build_and_send_packet(1, 26, [state]) roverSerial.write(bytearray(arm_packet)) time.sleep(0.25) time.sleep(0.5) arm_rover(True) sensor.reset() sensor.set_pixformat(sensor.RGB565) sensor.set_framesize(sensor.QVGA) sensor.skip_frames(time = 2000) sensor.set_vflip(True) sensor.set_hmirror(True) clock = time.clock() # define the clock while(True): clock.tick() img = sensor.snapshot() print(clock.fps())

Sending Rover Commands

Once the Rover is in expansion header mode, you can send commands to it.

One of the most useful commands to know how to send to the Rover is the move motors command. Similar to the expansion mode command, the motor movement packet is constructed as follows:

| Start Byte | Operation Byte (Read/Write) | Register Byte | Data Length Byte | Data |

|---|---|---|---|---|

| 0x05 | 0x01 (Writing) | 0 | 2 | Left speed, right speed |

Using this, we can send a motor movement command to the rover using the packet build and send function we made earlier. For this example, we will assume that we want to give in a speed from -30 to 30. Note that the speed needs to be scaled and then encoded as a signed binary number before stored in a packet and sent over UART.

def write_motors(leftSpeed, rightSpeed): lSpeed = int(leftSpeed*(127/30)) rSpeed = int(rightSpeed*(127/30)) # 127 = full speed # 0 = 0 # 255 = full backwards if (lSpeed < 0): lSpeed -= 254 if (rSpeed < 0): rSpeed -= 254 packet = buildAndSendPacket(1, 0, [lSpeed, rSpeed]) uart.write(bytearray(packet)) time.sleep(0.1)

Object Tracking

Once we have set the threshold, we can proceed with tracking objects of this color using OpenMV's built-in blob detection. This process involves multiple steps and is executed in the main loop. Here's an overview of the steps:

Next, adjust the thresholds until the tracked color is clearly defined in the binary image. Copy and store the LAB Threshold values in a variable for use when the Rover is armed. Here is an example of these values:

threshold = (26, 55, -73, 14, -40, 15)

Using this threshold, we can then track objects of this colour using OpenMV’s built in blob detection. This is a multi step process which is executed in the main loop.

Take a camera snapshot.

Apply thresholding to identify all the blobs that match the defined color.

Since the largest blob is likely the object being tracked, save its position

These coordinates can then be used to adjust the Rover based on the object’s position in the snapshot.

To initialise the camera, the following code can be used.

sensor.reset() # Reset and initialise the sensor sensor.set_pixformat(sensor.RGB565) # Set the pixel format to RGB565 or GRAYSCALE sensor.set_framesize(sensor.QVGA) # QVGA is 320x240 sensor.set_windowing((framew, frameh)) # Window size set to 240x240 sensor.skip_frames(time = 2000) # Letting the camer adjust sensor.set_vflip(True)

This can then be combined with the following object detection code in the main loop.

while(True): clock.tick() img = sensor.snapshot() blobs = img.find_blobs([thresholdDucks], area_threshold = 1, merge = True) blob_area = 0 for blob in blobs: if blob.area() > blob_area: blob_area = blob.area() img.draw_rectangle(blob.rect(), color = (0, 255, 0)) img.draw_cross(blob.cx(), blob.cy(), color = (0, 255, 0)) blob_rel_X_pos = framew/2 - blob.cx() blob_rel_Y_pos = frameh/2 - blob.cy()

Overall Code

Here's a cohesive code snippet that combines the initialisation and object detection in the main loop:

import sensor, image, time, pyb, math from pyb import UART, LED # LED settings (1: red, 2: green, 3: blue, 4: IR) red_led = pyb.LED(1) green_led = pyb.LED(2) blue_led = pyb.LED(3) ir_leds = pyb.LED(4) output_pin = pyb.Pin("P0", pyb.Pin.OUT_PP) red_led.off() uart = UART(3, 115200, timeout_char = 1000) framew = 240 frameh = 240 motor_speed = 10 sensor.reset() # Reset and initialise the sensor sensor.set_pixformat(sensor.RGB565) # Set the pixel format to RGB565 or GRAYSCALE sensor.set_framesize(sensor.QVGA) # QVGA is 320x240 sensor.set_windowing((framew, frameh)) # Window size set to 240x240 sensor.skip_frames(time = 2000) # Letting the camer adjust sensor.set_vflip(True) # Defining the mix/max LAB values for the blobs - trying to track an orange cube in this scenario thredholdSam = (9, 40, -11, 26, -23, 43) # Rover interaction settings # Useful functions for communicating with the rover # Packet is in the format: # - Start Byte (always 0x55) # - Operation (0x00 = Read, 0x01 = Write) # - Register (0 is the register of the Motors) # - Data length (how many bytes of data) # - Data (respective to the data length) def build_and_send_packet(opCode, opType, data=None): if data is None: data = [] packet = [0x55, opCode, opType, len(data)] packet.extend(data) # Extend because we do not know the data length return packet # Takes motor speeds between -30 and 30 and writes them to the robot def write_motors(leftSpeed, rightSpeed): lSpeed = int(leftSpeed*(127/30)) rSpeed = int(rightSpeed*(127/30)) # 127 = full speed # 0 = 0 # 255 = full backwards if (lSpeed < 0): lSpeed -= 254 if (rSpeed < 0): rSpeed -= 254 packet = buildAndSendPacket(1, 0, [lSpeed, rSpeed]) uart.write(bytearray(packet)) time.sleep(0.1) def arm_robot(state): packet = buildAndSendPacket(1, 26, [state]) uart.write(bytearray(packet)) time.sleep(0.25) def turn_degrees clock = time.clock() print("Arming") time.sleep(2) # Wait for the rover to start up arm_robot(True) # Arming package while(True): clock.tick() img = sensor.snapshot() blobs = img.find_blobs([thresholdDucks], area_threshold = 1, merge = True) blob_area = 0 for blob in blobs: if blob.area() > blob_area: # Purpose of this is to find the largest blob. # If the blob was larger than the last one then we overwrite the area and # consequently also overwrite the location of the blob (i.e. how far the rover has to steer) # If the blob is not bigger, then we ignore it as it must not be the object which we are # looking to track blob_area = blob.area() img.draw_rectangle(blob.rect(), color = (0, 255, 0)) img.draw_cross(blob.cx(), blob.cy(), color = (0, 255, 0)) blob_rel_X_pos = framew/2 - blob.cx() blob_rel_Y_pos = frameh/2 - blob.cy()

Once we have successfully tracked the object's position, the next step is to convert the object coordinates into motor movement commands. There are multiple algorithms to explore for this purpose. Experimenting with different approaches will help determine the most suitable one for your specific requirements.